CloudStack

We are using [https://cloudstack.apache.org/ Apache CloudStack] to provide VMs-as-a-service to members. Our user documentation is here: https://docs.cloud.csclub.uwaterloo.ca

Prerequisite reading:
* [[Ceph]]
* [[Cloud Networking]]

Official CloudStack documentation: http://docs.cloudstack.apache.org/en/4.16.0.0/

== Rebooting machines ==

I'm going to start with this because it is what future sysadmins are most interested in. If you need to reboot one of the CloudStack host machines (as of this writing: biloba, ginkgo and chamomile), I suggest you first live-migrate all of the VMs on that host to the other machines (see [[#Sequential reboot]]).

If this is not possible (e.g. there is not enough capacity on the other machines), then CloudStack will most likely shut down the VMs automatically. <b>You are responsible for restarting them manually after the reboot.</b> You will also need to manually restart any Kubernetes clusters.

Note: if cloudstack-agent.service has trouble reconnecting to the management servers after a reboot, just do a <code>systemctl restart cloudstack-agent</code> and cross your fingers.

=== Sequential reboot === |

|||

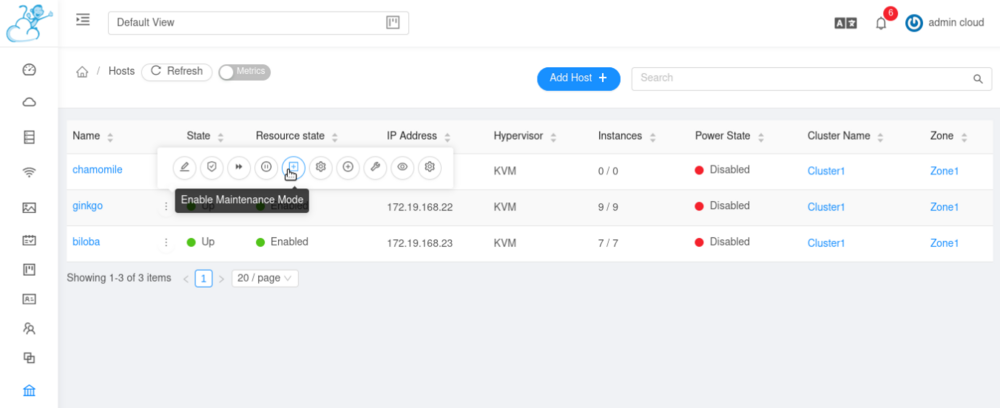

If it is possible to reboot the machines one at a time (e.g. for a software upgrade), then it is possible to avoid having any downtime. Login to the web UI as admin, go to Infrastructure > Hosts, hover above the three-dots button for a particular host, then press the "Enable Maintenance Mode" button. |

|||

[[File:Cloudstack-enable-maintenance-mode-button.png|1000px]] |

|||

<br><br> |

|||

Wait for the VMs to be migrated to the other machines (press the Refresh button to update the table). If you see an error which says "ErrorInPrepareForMaintenance", just wait it out. If more than 20 minutes have passed and there is still no progress, take the host out of maintenance mode, and put it back into maintenance mode. If this still does not work, restart the management server. |

|||

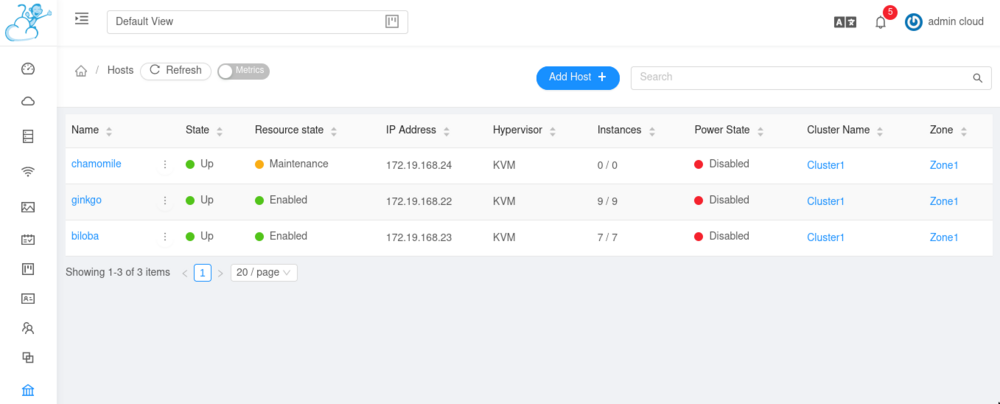

When a host is in maintenance mode, it should look like this: |

|||

[[File:Cloudstack-host-in-maintenance-mode.png|1000px]] |

|||

<br><br> |

|||

Once all VMs have been migrated, do whatever you need to do on the physical host; once it is back up, take it back out of maintenance mode from the web UI. Repeat for any other hosts which need to be taken offline. |
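
The maintenance-mode round trip can also be scripted against the API. The sketch below has not been tested on our cluster and assumes that <code>cmk</code> accepts an API call as command-line arguments (the same syntax as in its interactive shell). The function names are hypothetical; <code>listHosts</code>, <code>prepareHostForMaintenance</code> and <code>cancelHostMaintenance</code> are standard CloudStack API calls.

<pre>
# cmk command to use; set CMK=echo to print the API calls instead of running them.
CMK="${CMK:-cmk}"

# List the KVM hypervisor hosts (CloudStack calls them "Routing" hosts)
# so you can find the UUID of the host you want to reboot.
list_compute_hosts() {
    # $CMK is deliberately unquoted so that e.g. CMK="echo cmk" also works.
    $CMK listHosts type=Routing
}

# API equivalent of "Enable Maintenance Mode" in the web UI.
enter_maintenance() {
    local host_id="${1:?usage: enter_maintenance HOST_UUID}"
    $CMK prepareHostForMaintenance id="$host_id"
}

# API equivalent of taking the host out of maintenance mode once it is back up.
leave_maintenance() {
    local host_id="${1:?usage: leave_maintenance HOST_UUID}"
    $CMK cancelHostMaintenance id="$host_id"
}
</pre>

For example: run <code>enter_maintenance</code> with the host's UUID, wait until <code>listHosts id=...</code> reports a <code>resourcestate</code> of Maintenance, do the work, then run <code>leave_maintenance</code>.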

== Unexpected reboot ==

Sometimes a network interface fails on a machine after the switches in MC are rebooted (looking at you, riboflavin). Or a machine randomly goes offline in the middle of the night (looking at you, ginkgo). Point is, sometimes a machine needs to be rebooted, or is forcefully rebooted, without preparation. Unfortunately, <strong>CloudStack is unable to recover gracefully from an unexpected reboot</strong>, so <strong>manual intervention is required</strong> to get the VMs back into a working state.

Once the machine has come back online, perform the following:

<ol>
<li>All of the VMs which were on that machine will eventually transition to the Stopped state. Wait for this to happen first (from the web UI).</li>
<li>Go to Infrastructure -> Management servers and make sure that both biloba and chamomile are present and running. If not, you may need to restart the management server on the affected machine (<code>systemctl restart cloudstack-management</code>). Watch the journald logs for any error messages.</li>
<li>Go to Infrastructure -> Hosts and make sure that all three hosts (biloba, chamomile and ginkgo) are present and running. If not, you may need to restart the agent on the affected machine (<code>systemctl restart cloudstack-agent</code>). Watch the journald logs for any error messages.</li>
<li>If you restart cloudstack-agent, restart virtlogd as well, just for good measure. Watch the journald logs for any error messages.</li>
<li>Start ONE of the stopped VMs and make sure that it transitions to the Running state. If more than 20 minutes pass and it still hasn't started, restart the management servers and try again.</li>
<li>Start the rest of the stopped VMs.</li>
</ol>
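
On the affected host, the agent restarts in the list above boil down to a couple of restarts followed by a look at the logs. A minimal sketch (the helper names are hypothetical; with <code>DRY_RUN=1</code> it prints the commands instead of running them):

<pre>
# Print commands instead of running them when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Restart the agent (and virtlogd, for good measure), then show the
# most recent agent log lines so you can look for errors.
restart_agent_stack() {
    run systemctl restart cloudstack-agent
    run systemctl restart virtlogd
    run journalctl -u cloudstack-agent -n 50 --no-pager
}
</pre>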

== Administration ==

To log in with the admin account, use the following credentials in the web UI:

<ul>
<li>Username: admin</li>
<li>Password: <i>stored in the usual place</i></li>
<li>Domain: <i>leave this empty</i></li>
</ul>

There is another admin account for the Members domain. It is needed to create projects in the Members domain which regular members can access. Note that this account has fewer privileges than the root admin account above (it has the DomainAdmin role instead of the RootAdmin role).

<ul>
<li>Username: membersadmin</li>
<li>Password: <i>stored in the usual place</i></li>
<li>Domain: Members</li>
</ul>

Note that there are two management servers, one on each of biloba and chamomile (chamomile is a hot standby for biloba). If you restart one of them, you should restart the other as well.

=== CLI ===

CloudStack has a CLI called [https://github.com/apache/cloudstack-cloudmonkey cloudmonkey] which is already set up on biloba. Just run <code>cmk</code> as root to start it up.

Cloudmonkey is basically a shell for the API (https://cloudstack.apache.org/api/apidocs-4.16/). For example, to list all domains:

<pre>
listDomains details=min
</pre>

Run <code>somecommand -h</code> to see all parameters for a particular command (or browse the API documentation).

See https://github.com/apache/cloudstack-cloudmonkey for more details.


== Incompatibility with Debian 12 packages ==

After upgrading ginkgo to bookworm, we discovered that libvirt 8+ was incompatible with CloudStack 4.16.0.0 (see https://www.shapeblue.com/advisory-on-libvirt-8-compatibility-issues-with-cloudstack/ for details), so we built new packages from the 4.16.1.0 branch of ShapeBlue's GitHub repository. For some reason the cloudstack-management process failed with errors from SLF4J, so we needed to download some JARs:

<pre>
wget -O /usr/share/cloudstack-management/lib/log4j-1.2.17.jar https://repo1.maven.org/maven2/log4j/log4j/1.2.17/log4j-1.2.17.jar
wget -O /usr/share/cloudstack-management/lib/slf4j-log4j12-1.6.6.jar https://repo1.maven.org/maven2/org/slf4j/slf4j-log4j12/1.6.6/slf4j-log4j12-1.6.6.jar
</pre>

See https://stackoverflow.com/a/70528383 for details.

We also ran into a Java 11 -> 17 incompatibility issue, so the following parameter was added to the JAVA_OPTS variable in /etc/default/cloudstack-management:

<pre>
--add-opens java.base/java.lang=ALL-UNNAMED
</pre>

See https://stackoverflow.com/a/41265267 for details. Note that this file is NOT a shell script, so you cannot use variable interpolation; you must modify the value of JAVA_OPTS directly.
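
Because the file cannot use interpolation, the flag has to be spliced into the existing <code>JAVA_OPTS="..."</code> value itself. A small sketch of doing that idempotently with GNU sed, assuming JAVA_OPTS sits on a single line in double quotes (check your copy of the file first); <code>add_java_opt</code> is a hypothetical helper:

<pre>
# Append FLAG inside the JAVA_OPTS="..." line of FILE, unless it is already
# there. The value is edited in place because the file is not a shell script.
add_java_opt() {
    local file="${1:?usage: add_java_opt FILE FLAG}"
    local flag="${2:?usage: add_java_opt FILE FLAG}"
    # Fixed-string match; "--" stops grep from parsing the flag as an option.
    if grep -qF -- "$flag" "$file"; then
        return 0
    fi
    # Capture everything up to the closing quote and put the flag before it.
    sed -i "s|^\(JAVA_OPTS=\"[^\"]*\)\"|\1 $flag\"|" "$file"
}
</pre>

Usage: <code>add_java_opt /etc/default/cloudstack-management "--add-opens java.base/java.lang=ALL-UNNAMED"</code>, then restart cloudstack-management. Running it twice does not add the flag twice.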

== Database setup ==

We are using master-master replication between two MariaDB instances on biloba and chamomile. See [https://mariadb.com/kb/en/setting-up-replication/ here] and [https://tunnelix.com/simple-master-master-replication-on-mariadb/ here] for instructions on how to set this up.

To avoid split-brain syndrome, mariadb.cloud.csclub.uwaterloo.ca points to a virtual IP shared by biloba and chamomile via keepalived. This means that only one host is actually handling requests at any moment; the other is a hot standby.

Also add the following parameters to /etc/mysql/my.cnf on the hosts running MariaDB:

<pre>
[mysqld]
innodb_rollback_on_timeout=1
innodb_lock_wait_timeout=600
max_connections=350
log-bin=mysql-bin
binlog-format = 'ROW'
</pre>

Also comment out (or remove) the following line in /etc/mysql/mariadb.conf.d/50-server.cnf:

<pre>
bind-address = 127.0.0.1
</pre>

Now restart MariaDB.
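
Both file edits above can be scripted, e.g. when rebuilding one of the database hosts. A sketch with hypothetical helper names, assuming GNU sed and that my.cnf does not already carry these settings elsewhere; it only edits files and leaves the MariaDB restart to you:

<pre>
# Comment out any active bind-address line so MariaDB listens on all
# interfaces (clients reach it through the keepalived virtual IP).
# Lines that are already commented out are left alone.
comment_bind_address() {
    local file="${1:?usage: comment_bind_address FILE}"
    sed -i 's/^\([[:space:]]*bind-address[[:space:]]*=.*\)$/#\1/' "$file"
}

# Append the CloudStack [mysqld] settings listed above, only once.
add_cloudstack_mysqld_settings() {
    local file="${1:?usage: add_cloudstack_mysqld_settings FILE}"
    grep -q '^innodb_rollback_on_timeout' "$file" && return 0
    cat >> "$file" <<'EOF'

[mysqld]
innodb_rollback_on_timeout=1
innodb_lock_wait_timeout=600
max_connections=350
log-bin=mysql-bin
binlog-format = 'ROW'
EOF
}
</pre>

On our Debian hosts that would be <code>comment_bind_address /etc/mysql/mariadb.conf.d/50-server.cnf</code> and <code>add_cloudstack_mysqld_settings /etc/mysql/my.cnf</code>, followed by <code>systemctl restart mariadb</code>.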

== Management server setup ==

Install the management server from our Debian repository:

<pre>
apt install cloudstack-management
</pre>

Run the database scripts:

<pre>
cloudstack-setup-databases cloud:password@localhost --deploy-as=root
</pre>

(Replace 'password' with a strong password.)

Open /etc/cloudstack/management/db.properties and replace all instances of 'localhost' with 'mariadb.cloud.csclub.uwaterloo.ca'.

Open /etc/cloudstack/management/server.properties and set 'bind.interface' to 127.0.0.1 (CloudStack is being reverse proxied behind NGINX).
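
The two property edits can be done non-interactively. A sketch, assuming the key in server.properties is spelled <code>bind.interface</code> as in the stock file shipped with CloudStack (check yours); <code>configure_management_properties</code> is a hypothetical helper that takes the config directory as its argument:

<pre>
# Point db.properties at the MariaDB virtual IP and make the management
# server listen on localhost only (NGINX proxies to it).
configure_management_properties() {
    local dir="${1:-/etc/cloudstack/management}"
    sed -i 's/localhost/mariadb.cloud.csclub.uwaterloo.ca/g' "$dir/db.properties"
    if grep -q '^bind\.interface=' "$dir/server.properties"; then
        sed -i 's/^bind\.interface=.*/bind.interface=127.0.0.1/' "$dir/server.properties"
    else
        echo 'bind.interface=127.0.0.1' >> "$dir/server.properties"
    fi
}
</pre>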

Run some more scripts:

<pre>
cloudstack-setup-management
</pre>

Mount the cloudstack-secondary CephFS volume at /mnt/cloudstack-secondary:

<pre>
mkdir /mnt/cloudstack-secondary
mount -t nfs4 -o port=2049 ceph-nfs.cloud.csclub.uwaterloo.ca:/cloudstack-secondary /mnt/cloudstack-secondary
</pre>

Now download the system VM template:

<pre>
/usr/share/cloudstack-common/scripts/storage/secondary/cloud-install-sys-tmplt -m /mnt/cloudstack-secondary/ -u https://download.cloudstack.org/systemvm/4.16/systemvmtemplate-4.16.0-kvm.qcow2.bz2 -h kvm -F
</pre>

The management server runs on port 8080 by default, so reverse proxy it from NGINX:

<pre>
location / {
    proxy_pass http://localhost:8080;
}
</pre>

== Compute node setup ==

Install packages:

<pre>
apt install cloudstack-agent libvirt-daemon-driver-storage-rbd qemu-block-extra
</pre>

Create a new user for CloudStack:

<pre>
useradd -s /bin/bash -d /nonexistent -M cloudstack
# set the password
passwd cloudstack
</pre>

Add the following to /etc/sudoers:

<pre>
cloudstack ALL=(ALL) NOPASSWD:ALL
Defaults:cloudstack !requiretty
</pre>

(There is a way to restrict this, but I was never able to get it to work.)
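
One way to avoid a typo locking you out of sudo is to put the same two lines in a drop-in file (Debian's default /etc/sudoers includes /etc/sudoers.d) and syntax-check it with <code>visudo -c -f</code> before installing it. A sketch with a hypothetical helper name; the rule itself is unchanged:

<pre>
# Write the cloudstack sudo rule to a drop-in file, validate it with
# visudo (when available), and install it with the mode sudo expects.
install_cloudstack_sudoers() {
    local dest="${1:-/etc/sudoers.d/cloudstack}" tmp
    tmp="$(mktemp)"
    cat > "$tmp" <<'EOF'
cloudstack ALL=(ALL) NOPASSWD:ALL
Defaults:cloudstack !requiretty
EOF
    if command -v visudo >/dev/null 2>&1 && ! visudo -c -f "$tmp" >/dev/null; then
        echo "sudoers syntax check failed; not installing" >&2
        rm -f "$tmp"
        return 1
    fi
    install -m 0440 "$tmp" "$dest"
    rm -f "$tmp"
}
</pre>

Run it as root with no argument to write /etc/sudoers.d/cloudstack.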

=== Network setup ===

The /etc/network/interfaces file should look something like this (taking ginkgo as an example):

<pre>
auto enp3s0f0
iface enp3s0f0 inet manual

auto ens1f0np0
iface ens1f0np0 inet manual

# csc-cloud management
auto enp3s0f0.529
iface enp3s0f0.529 inet manual

auto br529
iface br529 inet static
    bridge_ports enp3s0f0.529
    address 172.19.168.22/27
iface br529 inet6 static
    bridge_ports enp3s0f0.529
    address fd74:6b6a:8eca:4902::22/64

# csc-cloud provider
auto ens1f0np0.425
iface ens1f0np0.425 inet manual

auto br425
iface br425 inet manual
    bridge_ports ens1f0np0.425

# csc server network
auto ens1f0np0.134
iface ens1f0np0.134 inet manual

auto br134
iface br134 inet static
    bridge_ports ens1f0np0.134
    address 129.97.134.148/24
    gateway 129.97.134.1
iface br134 inet6 static
    bridge_ports ens1f0np0.134
    address 2620:101:f000:4901:c5c::148/64
    gateway 2620:101:f000:4901::1
</pre>

Add or modify the following lines in /etc/cloudstack/agent/agent.properties:

<pre>
private.network.device=br529
guest.network.device=br425
public.network.device=br425
host=172.19.168.23,172.19.168.24@static
</pre>
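
Since these are plain <code>key=value</code> lines, a small upsert helper saves hand-editing each host. A sketch (the <code>set_property</code> name is hypothetical; it replaces an existing line for the key or appends one, and assumes GNU sed):

<pre>
# Set KEY=VALUE in a Java-style .properties file: replace the line for KEY
# if it exists, otherwise append it. Dots in the key are escaped so that
# grep/sed match them literally.
set_property() {
    local file="${1:?usage: set_property FILE KEY VALUE}"
    local key="${2:?usage: set_property FILE KEY VALUE}"
    local value="$3" key_re
    key_re="$(printf '%s' "$key" | sed 's/\./\\./g')"
    if grep -q "^${key_re}=" "$file"; then
        sed -i "s|^${key_re}=.*|${key}=${value}|" "$file"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}
</pre>

For ginkgo, with <code>f</code> set to the agent.properties path given above: <code>set_property "$f" private.network.device br529</code>, <code>set_property "$f" guest.network.device br425</code>, <code>set_property "$f" public.network.device br425</code> and <code>set_property "$f" host 172.19.168.23,172.19.168.24@static</code>.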

=== libvirtd setup ===

Add/modify the following lines in /etc/libvirt/libvirtd.conf:

<pre>
listen_tls = 0
listen_tcp = 1
tcp_port = "16509"
auth_tcp = "none"
mdns_adv = 0
</pre>

Uncomment the following line in /etc/default/libvirtd:

<pre>
LIBVIRTD_ARGS="--listen"
</pre>

Make sure the following lines are present in /etc/libvirt/qemu.conf:

<pre>
security_driver="none"
user="root"
group="root"
</pre>

Now run:

<pre>
systemctl mask libvirtd.socket
systemctl mask libvirtd-ro.socket
systemctl mask libvirtd-admin.socket
systemctl restart libvirtd
</pre>

== Management server setup (cont'd) ==

Now start the cloudstack-management systemd service and visit the web UI (https://cloud.csclub.uwaterloo.ca). The initial login credentials are 'admin' for both the username and password. Start the setup walkthrough (you will be prompted to change the password). Make sure to choose Basic Networking.

The walkthrough is almost certainly going to fail (at least, it did for me). Don't panic when this happens; just abort the walkthrough and set up everything else manually. Once primary and secondary storage have been set up, and at least one host has been added, enable the Pod, Cluster and Zone (there should only be one of each).

=== Primary Storage ===

* Type: RBD
* IP address: ceph-mon.cloud.csclub.uwaterloo.ca
* Scope: zone
* Get the credentials which you created in [[Ceph#CloudStack_Primary_Storage]]

=== Secondary Storage ===

* Type: NFS
* Host: ceph-nfs.cloud.csclub.uwaterloo.ca:2049
* Path: /cloudstack-secondary

=== Global settings ===

Some global settings which you'll need to set from the web UI:

* ca.plugin.root.auth.strictness: false (this always caused issues for me, so I just disabled it)
* host: 172.19.168.23,172.19.168.24 (the VLAN 529 addresses of biloba and chamomile)

=== Adding a host ===

This is an extremely painful process which I am almost certainly doing wrong. It usually takes me 7-8 attempts to add a single host (that's not an exaggeration). This is what it looks like:

* Stop the cloudstack-agent service
* Configure /etc/cloudstack/agent/agent.properties
* Add a host from the CloudStack UI
* Start the cloudstack-agent service

This takes several attempts because cloudstack-agent actually <i>overwrites</i> your agent.properties file. If/when you notice that this has happened, start the whole process again.
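
Since the overwrite is what forces the retries, it helps to check right after starting the agent whether your settings survived. A sketch of such a check (the helper name is hypothetical; it compares exact <code>key=value</code> lines and returns non-zero if any are missing):

<pre>
# Report which expected KEY=VALUE lines are missing from a properties file,
# e.g. after cloudstack-agent has rewritten it. Returns 1 on any drift.
check_properties() {
    local file="${1:?usage: check_properties FILE KEY=VALUE...}" status=0 pair
    shift
    for pair in "$@"; do
        # -x: whole line, -F: fixed string (dots are not regex wildcards).
        if ! grep -qxF -- "$pair" "$file"; then
            echo "missing or changed: $pair" >&2
            status=1
        fi
    done
    return "$status"
}
</pre>

For example, <code>check_properties "$f" private.network.device=br529 guest.network.device=br425 public.network.device=br425</code>, where <code>$f</code> is the agent.properties path; any line it reports means the file was rewritten.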

=== Accessing the System VMs ===

If you need to SSH into one of the System VMs, get its link-local address from the web UI, and run e.g.

<pre>
ssh -i /var/lib/cloudstack/management/.ssh/id_rsa -p 3922 root@169.254.232.179
</pre>

=== Some more global settings ===

<pre>
allow.user.expunge.recover.vm = true
allow.user.view.destroyed.vm = true
expunge.delay = 1
expunge.interval = 1
network.securitygroups.defaultadding = false
allow.public.user.templates = false
vm.network.throttling.rate = 0
network.throttling.rate = 0
cpu.overprovisioning.factor = 4.0
allow.user.create.projects = false
max.project.cpus = 8
max.project.memory = 8192
max.project.primary.storage = 40
max.project.secondary.storage = 20
max.account.cpus = 8
max.account.memory = 8192
max.account.primary.storage = 40
max.account.secondary.storage = 20
</pre>

<b>NOTE</b>: the <code>cpu.overprovisioning.factor</code> setting also needs to be set for existing clusters. Go to Infrastructure -> Clusters -> Cluster1 -> Settings and set it accordingly.
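
Setting these one by one in the UI is tedious; the same can be done through the <code>updateConfiguration</code> API call, which also accepts a <code>clusterid</code> for the cluster-level override mentioned in the note. A sketch, assuming <code>cmk</code> takes the API call as command-line arguments; the function names are hypothetical, and <code>CMK=echo</code> prints the calls instead of running them:

<pre>
# cmk command to use; set CMK=echo to print the API calls instead.
CMK="${CMK:-cmk}"

# Read "name = value" lines (the format of the list above) on stdin and
# apply each one with updateConfiguration. Blank and # lines are skipped.
apply_global_settings() {
    local name value
    while IFS='=' read -r name value; do
        name="$(echo "$name" | tr -d '[:space:]')"
        value="$(echo "$value" | sed 's/^[[:space:]]*//; s/[[:space:]]*$//')"
        case "$name" in ''|'#'*) continue ;; esac
        # </dev/null keeps cmk from swallowing the rest of the list on stdin.
        $CMK updateConfiguration name="$name" value="$value" < /dev/null
    done
}

# Cluster-scoped override of the CPU overprovisioning factor.
set_cluster_overprovisioning() {
    local cluster_id="${1:?usage: set_cluster_overprovisioning CLUSTER_UUID [FACTOR]}"
    $CMK updateConfiguration name=cpu.overprovisioning.factor value="${2:-4.0}" clusterid="$cluster_id"
}
</pre>

Save the list above to a file and run <code>apply_global_settings < settings.txt</code>, then <code>set_cluster_overprovisioning</code> with the cluster UUID from <code>listClusters</code>. Some settings may only take effect after a management server restart.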

=== Firewall ===

Since we disabled certificate validation from the clients, we're going to use some iptables-fu on all of the CloudStack hosts (to make our lives easier, we're going to use the same rules on the management and agent servers):

<pre>
iptables -N CLOUDSTACK-SERVICES
iptables -A INPUT -j CLOUDSTACK-SERVICES
iptables -A CLOUDSTACK-SERVICES -i lo -j RETURN
iptables -A CLOUDSTACK-SERVICES -s 172.19.168.0/27 -j RETURN
iptables -A CLOUDSTACK-SERVICES -p tcp -m multiport --dports 16509,16514,45335,41047,8250 -j REJECT
iptables-save > /etc/iptables/rules.v4

ip6tables -N CLOUDSTACK-SERVICES
ip6tables -A INPUT -j CLOUDSTACK-SERVICES
ip6tables -A CLOUDSTACK-SERVICES -i lo -j RETURN
ip6tables -A CLOUDSTACK-SERVICES -s fd74:6b6a:8eca:4902::/64 -j RETURN
ip6tables -A CLOUDSTACK-SERVICES -p tcp -m multiport --dports 16509,16514,45335,41047,8250 -j REJECT
ip6tables-save > /etc/iptables/rules.v6
</pre>
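
The commands above are not idempotent: running the <code>-A</code> lines a second time appends duplicate rules. A sketch of an idempotent variant that checks each rule with <code>-C</code> before appending it (the helper names are hypothetical; the chain, ports and subnets are the ones above):

<pre>
# Ports that only the management VLAN may reach (same list as above).
CS_PORTS="16509,16514,45335,41047,8250"

# ensure_rule CMD CHAIN RULE... : append RULE to CHAIN unless it already exists.
ensure_rule() {
    local ipt="$1" chain="$2"
    shift 2
    "$ipt" -C "$chain" "$@" 2>/dev/null || "$ipt" -A "$chain" "$@"
}

# apply_cloudstack_rules CMD SUBNET : CMD is iptables or ip6tables.
apply_cloudstack_rules() {
    local ipt="$1" subnet="$2"
    # Create the chain only if listing it fails (i.e. it does not exist yet).
    "$ipt" -n -L CLOUDSTACK-SERVICES >/dev/null 2>&1 || "$ipt" -N CLOUDSTACK-SERVICES
    ensure_rule "$ipt" INPUT -j CLOUDSTACK-SERVICES
    ensure_rule "$ipt" CLOUDSTACK-SERVICES -i lo -j RETURN
    ensure_rule "$ipt" CLOUDSTACK-SERVICES -s "$subnet" -j RETURN
    ensure_rule "$ipt" CLOUDSTACK-SERVICES -p tcp -m multiport --dports "$CS_PORTS" -j REJECT
}
</pre>

Usage: <code>apply_cloudstack_rules iptables 172.19.168.0/27</code> and <code>apply_cloudstack_rules ip6tables fd74:6b6a:8eca:4902::/64</code>, followed by the same <code>iptables-save</code>/<code>ip6tables-save</code> commands as above.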

=== LDAP authentication ===

Go to Global Settings in the UI, type 'ldap' in the search bar, and configure the parameters as needed. Make sure the mail attribute is set to 'mailLocalAddress'.

Create a new domain called 'Members'. Then go to 'LDAP Configuration', click the 'Configure LDAP +' button, and add a new LDAP config linked to the domain you just created.

[[ceo]] handles the creation of CloudStack accounts, so create an API key + secret token and add it to /etc/csc/ceod.ini on biloba.

=== Templates ===

This deserves an entire page of its own - see [[CloudStack Templates]].

=== Kubernetes ===

This deserves an entire page of its own - see [[Kubernetes]].

== Upgrading CloudStack ==

Please be <b>extremely</b> careful if you decide to upgrade CloudStack. The last time I tried to perform an upgrade (from 4.15 to 4.16), the agents refused to connect to the management servers (or maybe it was the other way around?), and I ended up having to <b>wipe the entire CloudStack installation clean and start again from scratch</b>. So it is fair to say that nobody has ever managed to upgrade CloudStack successfully on our machines. Do this at your own risk.

If you decide to perform an upgrade, then at the very least, you will need to back up the MariaDB databases ('cloud' and 'cloud_usage'), as well as the /etc/cloudstack and /var/lib/cloudstack directories on each of biloba, chamomile and ginkgo. Also, good luck.
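
A sketch of that backup (hypothetical helper; <code>DRY_RUN=1</code> prints the commands instead of running them). It assumes <code>mysqldump</code> can connect as root over the local socket, which is the Debian default for MariaDB's root user. Since the two MariaDB instances replicate each other, the dump should only be needed once, while the tarball should be taken on every host; pass <code>--no-db</code> where you skip the dump:

<pre>
# Print commands instead of running them when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# backup_cloudstack DEST_DIR [--no-db]
# Dumps the cloud and cloud_usage databases and archives /etc/cloudstack
# and /var/lib/cloudstack into DEST_DIR, tagged with host name and time.
backup_cloudstack() {
    local dest="${1:?usage: backup_cloudstack DEST_DIR [--no-db]}" stamp host
    stamp="$(date +%Y%m%d-%H%M%S)"
    host="$(uname -n)"
    run mkdir -p "$dest"
    if [ "${2:-}" != "--no-db" ]; then
        run mysqldump --single-transaction --routines --databases cloud cloud_usage \
            --result-file="$dest/cloudstack-db-$host-$stamp.sql"
    fi
    run tar -czf "$dest/cloudstack-files-$host-$stamp.tar.gz" /etc/cloudstack /var/lib/cloudstack
}
</pre>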

== Siracha Crash Out Notes ==

If you ever find yourself in the unfortunate position of needing to recover from a drive failure: it's JOEVER BRO TRUST ME.

Anyways, this is what I've learned from the pits of hell:

# Our nginx config is funny: it redirects 9987 → gunicorn → the ceod instance running on the server
## If the drive dies, ask someone with a backup for just a zip file; it's not possible to recover. Trust me
# You need to reload both nginx & CloudStack to get it running
# You need the crt to get it working (stored under <code>/etc/csc/k8s</code>, I think)

== Crash Out Notes Cont. ==

The user console on CloudStack was not working.

On chamomile, <code>/etc/nginx/sites-enabled</code> contains a file for each enabled user website. The file <code>consoleproxy.cloud.csclub.uwaterloo.ca</code> shows that the console proxy is hosted on the CloudStack VM with IP <code>172.19.134.59</code>.

Run the following command on chamomile to access the console proxy:

<code>sudo ssh -i /var/lib/cloudstack/management/.ssh/id_rsa -p 3922 169.254.35.150</code>

More information on troubleshooting the console and console proxy can be found [https://cwiki.apache.org/confluence/display/CLOUDSTACK/View+Console+and+Console+Proxy+Troubleshooting here].

Latest revision as of 14:55, 1 July 2025


== Building packages ==

While CloudStack does provide .deb packages for Ubuntu, unfortunately these don't work on Debian (the 'qemu-kvm' dependency is a virtual package on Debian, but not on Ubuntu). So we're going to build our own packages instead.

We're going to perform the build in a Podman container to avoid polluting the host machine with unnecessary packages. There's a container called cloudstack-build on biloba which you can re-use. If you create a new container, make sure to use the same Podman image as the release for which you're building (e.g. 'debian:bullseye').

The instructions below are adapted from http://docs.cloudstack.apache.org/en/latest/installguide/building_from_source.html

Inside the container, install the dependencies:

<pre>
apt install maven openjdk-11-jdk libws-commons-util-java libcommons-codec-java libcommons-httpclient-java liblog4j1.2-java genisoimage devscripts debhelper python3-setuptools
</pre>

Install Node.js 12 as well (Debian bullseye's version happens to be 12):

<pre>
apt install nodejs npm
</pre>

The python3-mysql.connector package is not available in bullseye, so we're going to download and install it from the sid release:

<pre>
curl -LOJ http://ftp.ca.debian.org/debian/pool/main/m/mysql-connector-python/python3-mysql.connector_8.0.15-2_all.deb
apt install ./python3-mysql.connector_8.0.15-2_all.deb
</pre>

Download the CloudStack source code:

<pre>
curl -LOJ http://mirror.csclub.uwaterloo.ca/apache/cloudstack/releases/4.16.0.0/apache-cloudstack-4.16.0.0-src.tar.bz2
tar -jxvf apache-cloudstack-4.16.0.0-src.tar.bz2
cd apache-cloudstack-4.16.0.0-src
</pre>

From the top of the source tree, build the node-sass module (see this issue for why this is necessary):

<pre>
cd ui && npm install && npm rebuild node-sass && cd ..
</pre>

Download the Maven dependencies:

<pre>
mvn -P deps
</pre>

Now open debian/control and perform the following changes:

* Replace 'qemu-kvm (>=2.5)' with 'qemu-system-x86 (>= 1:5.2)' in the dependencies of cloudstack-agent
* Remove dh-systemd as a build dependency of cloudstack (it's included in debhelper)

Now open debian/rules and add the following flags to the mvn command:

<pre>
-Dmaven.test.skip=true -Dclean.skip=true -Dcheckstyle.skip
</pre>

Now open debian/changelog and change 'unstable' to 'bullseye'.
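
The control and changelog edits can be applied with sed. A sketch, assuming the dependency is written exactly as <code>qemu-kvm (>=2.5)</code> and the newest changelog entry is on line 1 (check your checkout); the dh-systemd removal and the debian/rules flags are left as manual edits because their exact layout isn't shown here. The helper name is hypothetical:

<pre>
# patch_debian_packaging SRC_DIR [RELEASE]
# Swaps the qemu-kvm dependency in debian/control and sets the
# distribution of the newest debian/changelog entry to RELEASE.
patch_debian_packaging() {
    local src="${1:?usage: patch_debian_packaging SRC_DIR [RELEASE]}"
    local release="${2:-bullseye}"
    # Parentheses are literal in basic regexps; only the dot needs escaping.
    sed -i 's/qemu-kvm (>=2\.5)/qemu-system-x86 (>= 1:5.2)/' "$src/debian/control"
    # Line 1 looks like: cloudstack (4.16.0.0) unstable; urgency=low
    sed -i "1s/) unstable;/) $release;/" "$src/debian/changelog"
}
</pre>

Run it from the container as <code>patch_debian_packaging .</code> inside the source tree.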

As of this writing, there is a bug in libvirt which prevents VMs with more than 4GB of RAM from being created on hosts with cgroups v2. Until that issue is fixed, we need to modify the source code. Since we're already building a custom CloudStack package, it's easier to patch CloudStack than to patch libvirt, so paste something like the following into debian/patches/fix-cgroups2-cpu-weight.patch:

<pre>
Description: Workaround for libvirt trying to write a value to the cgroups v2
 cpu.weight controller which is greater than the maximum (10000). The
 libvirt developers are currently discussing a solution.
Forwarded: not-needed
Origin: upstream, https://gitlab.com/libvirt/libvirt/-/issues/161
Author: Max Erenberg <merenber@csclub.uwaterloo.ca>
Last-Update: 2021-12-03

Index: apache-cloudstack-4.16.0.0-src/plugins/hypervisors/kvm/src/main/java/com/cloud/hypervisor/kvm/resource/LibvirtVMDef.java
===================================================================
--- apache-cloudstack-4.16.0.0-src.orig/plugins/hypervisors/kvm/src/main/java/com/cloud/hypervisor/kvm/resource/LibvirtVMDef.java
+++ apache-cloudstack-4.16.0.0-src/plugins/hypervisors/kvm/src/main/java/com/cloud/hypervisor/kvm/resource/LibvirtVMDef.java
@@ -1483,6 +1483,10 @@ public class LibvirtVMDef {
         static final int MAX_PERIOD = 1000000;
         public void setShares(int shares) {
+            // Clamp the value to the cgroups v2 cpu.weight maximum until
+            // upstream libvirt gets fixed:
+            // https://gitlab.com/libvirt/libvirt/-/issues/161
+            shares = Math.min(shares, 10000);
             _shares = shares;
         }
</pre>

I think you have to manually modify that LibvirtVMDef.java file to incorporate those changes (I could be wrong on this, but that's how I did it).

Then paste the following into debian/patches/00list:

<pre>
fix-cgroups2-cpu-weight
</pre>

Finally, import your GPG key into the container (make sure to delete it afterwards!), and build the packages:

<pre>
debuild -k<YOUR_GPG_KEY_ID>
</pre>

There should already be a .dupload.conf in the /root directory of the cloudstack-build container; if you need another copy, ask a syscom member. Open /root/.ssh/config and change the User parameter to your username. Finally, go to /root and upload the packages to potassium-benzoate (replace the version number):

<pre>
dupload cloudstack_4.16.0.0+1_amd64.changes
</pre>

Incompatibility with Debian 12 packages

After upgrading ginkgo to bookworm, we discovered that libvirt 8+ was incompatible with CloudStack 4.16.0.0. See https://www.shapeblue.com/advisory-on-libvirt-8-compatibility-issues-with-cloudstack/ for details. So we built new packages from the 4.16.1.0 branch of ShapeBlue's GitHub repository. For some reason the cloudstack-management process failed with some errors from SLF4J, so we needed to download some JARs:

wget -O /usr/share/cloudstack-management/lib/log4j-1.2.17.jar https://repo1.maven.org/maven2/log4j/log4j/1.2.17/log4j-1.2.17.jar wget -O /usr/share/cloudstack-management/lib/slf4j-log4j12-1.6.6.jar https://repo1.maven.org/maven2/org/slf4j/slf4j-log4j12/1.6.6/slf4j-log4j12-1.6.6.jar

See https://stackoverflow.com/a/70528383 for details.

We also encountered some kind of Java 11 -> 17 incompatibility issue, so following parameters were added to the JAVA_OPTS variable in /etc/default/cloudstack-management:

--add-opens java.base/java.lang=ALL-UNNAMED

See https://stackoverflow.com/a/41265267 for details. Note that this file is NOT a shell script so you cannot use variable interpolation. You must modify the value of JAVA_OPTS directly.

Database setup

We are using master-master replication between two MariaDB instances on biloba and chamomile. See here and here for instructions on how to set this up.

To avoid split-brain syndrome, mariadb.cloud.csclub.uwaterloo.ca points to a virtual IP shared by biloba and chamomile via keepalived. This means that only one host is actually handling requests at any moment; the other is a hot standby.

Also add the following parameters to /etc/mysql/my.cnf on the hosts running MariaDB:

[mysqld] innodb_rollback_on_timeout=1 innodb_lock_wait_timeout=600 max_connections=350 log-bin=mysql-bin binlog-format = 'ROW'

Also comment out (or remove) the following line in /etc/mysql/mariadb.conf.d/50-server.cnf:

bind-address = 127.0.0.1

Now restart MariaDB.

Management server setup

Install the management server from our Debian repository:

apt install cloudstack-management

Run the database scripts:

cloudstack-setup-databases cloud:password@localhost --deploy-as=root

(Replace 'password' by a strong password.)

Open /etc/cloudstack/management/db.properties and replace all instances of 'localhost' by 'mariadb.cloud.csclub.uwaterloo.ca'.

Open /etc/cloudstack/management/server.properties and set 'bind.interface' to 127.0.0.1 (CloudStack is being reverse proxied behind NGINX).
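Both edits can be done with sed. This is a sketch, assuming the stock files. As far as I know, the key in the shipped server.properties is spelled bind.interface, but check your copy before running this. The function name is hypothetical:

```shell
# Hypothetical helper for the db.properties and server.properties edits.
#   $1 = config directory, normally /etc/cloudstack/management
configure_management_props() {
    local dir="$1"
    # Replace every occurrence of localhost (the database host entries)
    # with the hostname that points at the keepalived virtual IP.
    sed -i 's/localhost/mariadb.cloud.csclub.uwaterloo.ca/g' "$dir/db.properties"
    # Listen on loopback only; NGINX handles external traffic.
    if grep -q '^bind\.interface=' "$dir/server.properties"; then
        sed -i 's/^bind\.interface=.*/bind.interface=127.0.0.1/' "$dir/server.properties"
    else
        printf 'bind.interface=127.0.0.1\n' >> "$dir/server.properties"
    fi
}
```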

Run some more scripts:

cloudstack-setup-management

Mount the cloudstack-secondary CephFS volume at /mnt/cloudstack-secondary:

mkdir /mnt/cloudstack-secondary
mount -t nfs4 -o port=2049 ceph-nfs.cloud.csclub.uwaterloo.ca:/cloudstack-secondary /mnt/cloudstack-secondary

Now download the system VM template:

/usr/share/cloudstack-common/scripts/storage/secondary/cloud-install-sys-tmplt -m /mnt/cloudstack-secondary/ -u https://download.cloudstack.org/systemvm/4.16/systemvmtemplate-4.16.0-kvm.qcow2.bz2 -h kvm -F

The management server will run on port 8080 by default, so reverse proxy it from NGINX:

location / {
    proxy_pass http://localhost:8080;
}

Compute node setup

Install packages:

apt install cloudstack-agent libvirt-daemon-driver-storage-rbd qemu-block-extra

Create a new user for CloudStack:

useradd -s /bin/bash -d /nonexistent -M cloudstack
# set the password
passwd cloudstack

Add the following to /etc/sudoers:

cloudstack ALL=(ALL) NOPASSWD:ALL
Defaults:cloudstack !requiretty

(There is a way to restrict this, but I was never able to get it to work.)
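If you'd rather not edit /etc/sudoers directly, here is a sketch that writes the same two lines to a drop-in file and checks it with visudo before installing it. This assumes the stock Debian /etc/sudoers, which includes /etc/sudoers.d. The function name is hypothetical:

```shell
# Hypothetical helper: install the cloudstack sudoers rule as a drop-in.
#   $1 = target path, e.g. /etc/sudoers.d/cloudstack
install_cloudstack_sudoers() {
    local target="$1" tmp
    tmp="$(mktemp)" || return 1
    printf '%s\n' \
        'cloudstack ALL=(ALL) NOPASSWD:ALL' \
        'Defaults:cloudstack !requiretty' > "$tmp"
    chmod 0440 "$tmp"
    # A syntax error in a sudoers file can break sudo, so validate the
    # candidate file before moving it into place.
    if ! visudo -c -f "$tmp" >/dev/null; then
        rm -f "$tmp"
        return 1
    fi
    mv "$tmp" "$target"
}
```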

Network setup

The /etc/network/interfaces file should look something like this (taking ginkgo as an example):

auto enp3s0f0
iface enp3s0f0 inet manual

auto ens1f0np0
iface ens1f0np0 inet manual

# csc-cloud management
auto enp3s0f0.529
iface enp3s0f0.529 inet manual

auto br529
iface br529 inet static
    bridge_ports enp3s0f0.529
    address 172.19.168.22/27
iface br529 inet6 static
    bridge_ports enp3s0f0.529
    address fd74:6b6a:8eca:4902::22/64

# csc-cloud provider
auto ens1f0np0.425
iface ens1f0np0.425 inet manual

auto br425
iface br425 inet manual
    bridge_ports ens1f0np0.425

# csc server network
auto ens1f0np0.134
iface ens1f0np0.134 inet manual

auto br134
iface br134 inet static
    bridge_ports ens1f0np0.134
    address 129.97.134.148/24
    gateway 129.97.134.1
iface br134 inet6 static
    bridge_ports ens1f0np0.134
    address 2620:101:f000:4901:c5c::148/64
    gateway 2620:101:f000:4901::1

Add or modify the following lines in /etc/cloudstack/agent/agent.properties:

private.network.device=br529
guest.network.device=br425
public.network.device=br425
host=172.19.168.23,172.19.168.24@static
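agent.properties is a plain Java properties file, so these lines can be set with a small helper. This is a minimal sketch. The helper name is hypothetical, and it assumes keys contain only letters, digits, dots, dashes and underscores. It doesn't stop cloudstack-agent from rewriting the file later (see Adding a host):

```shell
# Hypothetical helper: set KEY=VALUE in a Java .properties file such as
# agent.properties. An existing entry (commented out or not) is replaced;
# otherwise the entry is appended.
set_property() {
    local file="$1" key="$2" value="$3" key_re val_esc
    # Dots in the key must match literally in the regex.
    key_re="$(printf '%s' "$key" | sed 's/\./\\./g')"
    # Escape characters that are special in a sed replacement ('|' is the delimiter).
    val_esc="$(printf '%s' "$value" | sed 's/[\\&|]/\\&/g')"
    if grep -q "^#\{0,1\}[[:space:]]*${key_re}=" "$file"; then
        sed -i "s|^#\{0,1\}[[:space:]]*${key_re}=.*|${key}=${val_esc}|" "$file"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}
```

For example, `set_property /etc/cloudstack/agent/agent.properties private.network.device br529`, repeated for each of the four lines above.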

libvirtd setup

Add/modify the following lines in /etc/libvirt/libvirtd.conf:

listen_tls = 0
listen_tcp = 1
tcp_port = "16509"
auth_tcp = "none"
mdns_adv = 0

Uncomment the following line in /etc/default/libvirtd:

LIBVIRTD_ARGS="--listen"

Make sure the following lines are present in /etc/libvirt/qemu.conf:

security_driver="none"
user="root"
group="root"

Now run:

systemctl mask libvirtd.socket
systemctl mask libvirtd-ro.socket
systemctl mask libvirtd-admin.socket
systemctl restart libvirtd
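The libvirtd.conf and qemu.conf edits can be scripted too. This is a sketch, assuming both files are flat key = value lists (as far as I know, they are in the Debian packages). It removes any active line for each key and appends the wanted value, leaving commented-out defaults untouched. The function names are hypothetical:

```shell
# Hypothetical helper: make "KEY = VALUE" the only active setting for KEY.
# Lines starting with '#' are left alone. Assumes KEY is [A-Za-z0-9_]+.
set_libvirt_option() {
    local file="$1" key="$2" value="$3"
    # Delete any uncommented line that already sets this key...
    sed -i "/^[[:space:]]*${key}[[:space:]]*=/d" "$file"
    # ...then append the desired value at the end of the file.
    printf '%s = %s\n' "$key" "$value" >> "$file"
}

# Apply the libvirtd.conf and qemu.conf settings listed above.
#   $1 = libvirt config directory, normally /etc/libvirt
configure_libvirt() {
    local dir="$1"
    set_libvirt_option "$dir/libvirtd.conf" listen_tls 0
    set_libvirt_option "$dir/libvirtd.conf" listen_tcp 1
    set_libvirt_option "$dir/libvirtd.conf" tcp_port '"16509"'
    set_libvirt_option "$dir/libvirtd.conf" auth_tcp '"none"'
    set_libvirt_option "$dir/libvirtd.conf" mdns_adv 0
    set_libvirt_option "$dir/qemu.conf" security_driver '"none"'
    set_libvirt_option "$dir/qemu.conf" user '"root"'
    set_libvirt_option "$dir/qemu.conf" group '"root"'
}
```

Run `configure_libvirt /etc/libvirt` before the systemctl commands above. The /etc/default/libvirtd change still has to be made separately.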

Management server setup (cont'd)

Now start the cloudstack-management systemd service and visit the web UI (https://cloud.csclub.uwaterloo.ca). The default login credentials are username 'admin' and password 'password'. Start the setup walkthrough (you will be prompted to change the password). Make sure to choose Basic Networking.

The walkthrough is almost certainly going to fail (at least, it did for me). Don't panic when this happens; just abort the walkthrough and set up everything else manually. Once primary and secondary storage have been set up and at least one host has been added, enable the Pod, Cluster and Zone (there should only be one of each).

Primary Storage

- Type: RBD
- IP address: ceph-mon.cloud.csclub.uwaterloo.ca
- Scope: zone
- Credentials: use the ones you created in Ceph#CloudStack_Primary_Storage

Secondary Storage

- Type: NFS
- Host: ceph-nfs.cloud.csclub.uwaterloo.ca:2049
- Path: /cloudstack-secondary

Global settings

Some global settings which you'll need to set from the web UI:

- ca.plugin.root.auth.strictness: false (this always caused issues for me, so I just disabled it)
- host: 172.19.168.23,172.19.168.24 (the VLAN 529 addresses of biloba and chamomile)

Adding a host

This is an extremely painful process which I am almost certainly doing wrong. It usually takes me 7-8 attempts to add a single host (that's not an exaggeration). This is what it looks like:

- Stop the cloudstack-agent service
- Configure /etc/cloudstack/agent/agent.properties
- Add a host from the CloudStack UI
- Start cloudstack-agent.service

This takes several attempts because cloudstack-agent actually overwrites your agent.properties file. If/when you notice that this has happened, start the whole process again.
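Because the agent can silently rewrite the file, one way to notice quickly is to compare it against the lines you meant to set. This is a small sketch with a hypothetical function name. It only checks exact key=value lines and ignores anything else the agent adds:

```shell
# Hypothetical helper: report expected key=value lines missing from a file.
#   $1 = agent.properties path, remaining args = expected key=value lines
# Returns 0 if everything is present, 1 otherwise.
check_agent_properties() {
    local file="$1" line missing=0
    shift
    for line in "$@"; do
        # -x: whole-line match; -F: treat dots, '@' etc. literally
        if ! grep -qxF -- "$line" "$file"; then
            echo "missing: $line"
            missing=1
        fi
    done
    return "$missing"
}
```

Run it right after starting cloudstack-agent.service, e.g. `check_agent_properties /etc/cloudstack/agent/agent.properties private.network.device=br529 guest.network.device=br425 public.network.device=br425 host=172.19.168.23,172.19.168.24@static` (adjust the path if yours differs). Any "missing:" output means the file was rewritten.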

Accessing the System VMs

If you need to SSH into one of the System VMs, get its link-local address from the web UI, and run e.g.

ssh -i /var/lib/cloudstack/management/.ssh/id_rsa -p 3922 root@169.254.232.179

Some more global settings

allow.user.expunge.recover.vm = true
allow.user.view.destroyed.vm = true
expunge.delay = 1
expunge.interval = 1
network.securitygroups.defaultadding = false
allow.public.user.templates = false
vm.network.throttling.rate = 0
network.throttling.rate = 0
cpu.overprovisioning.factor = 4.0
allow.user.create.projects = false
max.project.cpus = 8
max.project.memory = 8192
max.project.primary.storage = 40
max.project.secondary.storage = 20
max.account.cpus = 8
max.account.memory = 8192
max.account.primary.storage = 40
max.account.secondary.storage = 20

NOTE: the cpu.overprovisioning.factor setting also needs to be set for existing clusters. Go to Infrastructure -> Clusters -> Cluster1 -> Settings and set it accordingly.
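If you'd rather not click through the UI for all of these, CloudMonkey (cmk) can set global settings through the updateConfiguration API. This is a sketch, assuming cmk is installed and configured with an admin API key. The helper name is hypothetical, and it only prints commands so you can review them first:

```shell
# Hypothetical helper: turn "name = value" lines into CloudMonkey commands.
# Reads stdin, prints commands to stdout; nothing is executed.
settings_to_cmk() {
    local line name value
    while IFS= read -r line || [ -n "$line" ]; do
        case "$line" in
            *=*) ;;            # a "name = value" line
            *) continue ;;     # skip blank or malformed lines
        esac
        name="${line%%=*}"     # everything before the first '='
        value="${line#*=}"     # everything after it
        # Strip whitespace (none of these names or values contain spaces).
        name="${name//[[:space:]]/}"
        value="${value//[[:space:]]/}"
        printf 'cmk update configuration name=%s value=%s\n' "$name" "$value"
    done
}
```

For example, put the settings above into a file, one per line (say settings.txt), and run `settings_to_cmk < settings.txt` to review the commands. Then run `settings_to_cmk < settings.txt | sh`. For the cluster-level override in the note, updateConfiguration also accepts a cluster ID, e.g. `cmk update configuration name=cpu.overprovisioning.factor value=4.0 clusterid=<cluster UUID>`.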

Firewall

Since we disabled certificate validation from the clients, we're going to use some iptables-fu on all of the CloudStack hosts (to make our lives easier, we're going to use the same rules on the management and agent servers):

iptables -N CLOUDSTACK-SERVICES
iptables -A INPUT -j CLOUDSTACK-SERVICES
iptables -A CLOUDSTACK-SERVICES -i lo -j RETURN
iptables -A CLOUDSTACK-SERVICES -s 172.19.168.0/27 -j RETURN
iptables -A CLOUDSTACK-SERVICES -p tcp -m multiport --dports 16509,16514,45335,41047,8250 -j REJECT
iptables-save > /etc/iptables/rules.v4

ip6tables -N CLOUDSTACK-SERVICES
ip6tables -A INPUT -j CLOUDSTACK-SERVICES
ip6tables -A CLOUDSTACK-SERVICES -i lo -j RETURN
ip6tables -A CLOUDSTACK-SERVICES -s fd74:6b6a:8eca:4902::/64 -j RETURN
ip6tables -A CLOUDSTACK-SERVICES -p tcp -m multiport --dports 16509,16514,45335,41047,8250 -j REJECT
ip6tables-save > /etc/iptables/rules.v6
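Running the commands above a second time adds another jump from INPUT and another copy of every rule. Here is a sketch that can be re-run safely, using the same rules and a hypothetical function name. It flushes the chain if it already exists and only adds the INPUT jump when `iptables -C` reports it is missing:

```shell
# Hypothetical helper: (re)build the CLOUDSTACK-SERVICES chain idempotently.
#   $1 = iptables or ip6tables, $2 = trusted management subnet
apply_cloudstack_chain() {
    local ipt="$1" subnet="$2"
    # Create the chain, or flush it if it already exists, so re-runs
    # don't stack duplicate rules.
    "$ipt" -N CLOUDSTACK-SERVICES 2>/dev/null || "$ipt" -F CLOUDSTACK-SERVICES
    # Add the INPUT jump only if it is not there yet (-C checks for a rule).
    "$ipt" -C INPUT -j CLOUDSTACK-SERVICES 2>/dev/null \
        || "$ipt" -A INPUT -j CLOUDSTACK-SERVICES
    # Trust loopback and the management VLAN; reject the CloudStack and
    # libvirt ports from everywhere else.
    "$ipt" -A CLOUDSTACK-SERVICES -i lo -j RETURN
    "$ipt" -A CLOUDSTACK-SERVICES -s "$subnet" -j RETURN
    "$ipt" -A CLOUDSTACK-SERVICES -p tcp -m multiport \
        --dports 16509,16514,45335,41047,8250 -j REJECT
}
```

On each host, run something like `apply_cloudstack_chain iptables 172.19.168.0/27 && iptables-save > /etc/iptables/rules.v4`. Do the same with ip6tables and fd74:6b6a:8eca:4902::/64, writing to /etc/iptables/rules.v6.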

LDAP authentication

Go to Global Settings in the UI, type 'ldap' in the search bar, and configure the parameters as needed. Make sure the mail attribute is set to 'mailLocalAddress'.

Create a new domain called 'Members'. Then go to 'LDAP Configuration', click the 'Configure LDAP +' button, and add a new LDAP config linked to the domain you just created.

ceo handles the creation of CloudStack accounts, so create an API key + secret token and add it to /etc/csc/ceod.ini on biloba.

Templates

This deserves an entire page of its own - see CloudStack Templates.

Kubernetes

This deserves an entire page of its own - see Kubernetes.

Upgrading CloudStack

Please be extremely careful if you decide to upgrade CloudStack. The last time I tried to perform an upgrade (from 4.15 to 4.16), the agents refused to connect to the management servers (or maybe it was the other way around?), and I ended up having to wipe the entire CloudStack installation clean and start again from scratch. Therefore it is fair to say that nobody has ever managed to successfully upgrade CloudStack on our machines. Do this at your own risk.

If you decide to perform an upgrade, then at the very least, you will need to back up the MariaDB databases ('cloud' and 'cloud_usage'), as well as the /etc/cloudstack and /var/lib/cloudstack folders on each of biloba, chamomile and ginkgo. Also, good luck.
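Here is a rough sketch of those backups. It assumes the script runs as root and that mysqldump can log in without a password (e.g. via MariaDB's unix-socket auth for root, the Debian default). It also assumes DEST is somewhere that survives a reinstall. The function names and file naming scheme are hypothetical:

```shell
# Hypothetical pre-upgrade backup helpers.

# Dump the 'cloud' and 'cloud_usage' databases into DEST ($1).
backup_cloudstack_db() {
    local dest="$1" host
    host="$(uname -n)"; host="${host%%.*}"   # short hostname
    mkdir -p "$dest" || return 1
    # --single-transaction takes a consistent InnoDB snapshot without
    # locking tables while CloudStack is still running.
    mysqldump --single-transaction --databases cloud cloud_usage \
        --result-file="$dest/cloudstack-db-$host-$(date +%Y%m%d-%H%M%S).sql"
}

# Archive CloudStack directories into DEST ($1). Extra arguments override
# the default list; directories that don't exist on this host are skipped.
backup_cloudstack_files() {
    local dest="$1" host d
    shift
    local candidates=("$@") dirs=()
    if [ "${#candidates[@]}" -eq 0 ]; then
        candidates=(/etc/cloudstack /var/lib/cloudstack)
    fi
    for d in "${candidates[@]}"; do
        if [ -d "$d" ]; then dirs+=("$d"); fi
    done
    [ "${#dirs[@]}" -gt 0 ] || return 0   # nothing to archive here
    host="$(uname -n)"; host="${host%%.*}"
    mkdir -p "$dest" || return 1
    tar -czf "$dest/cloudstack-files-$host-$(date +%Y%m%d-%H%M%S).tar.gz" "${dirs[@]}"
}
```

Run backup_cloudstack_db on biloba or chamomile. Because the databases are replicated, one dump should cover both. Run backup_cloudstack_files on each of biloba, chamomile and ginkgo.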

Siracha Crash Out Notes

If you ever find yourself in the unfortunate position of needing to recover from a drive failure: it's JOEVER BRO TRUST ME.

Anyways, this is what I've learned from the pits of hell:

- Our nginx config is funny: it forwards port 9987 → gunicorn → the ceod instance running on the server
- If the drive dies, ask someone with a backup for just a zip file; it's not possible to recover. Trust me
- You need to reload both nginx & CloudStack to get it running
- You need the crt to get it working (stored under `/etc/csc/k8s`, I think)

Crash Out Notes Cont.

The user console on CloudStack was not working.

On chamomile, /etc/nginx/sites-enabled contains the configs for all enabled websites. The file consoleproxy.cloud.csclub.uwaterloo.ca in that directory shows that the console proxy is hosted on the CloudStack VM with IP 172.19.134.59.

Run the following command on chamomile to access the console proxy:

sudo ssh -i /var/lib/cloudstack/management/.ssh/id_rsa -p 3922 169.254.35.150

More information on troubleshooting the console and console proxy can be found here.